- December 06 2023

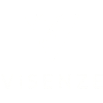

A/B testing in ecommerce: How to get it right

Ecommerce companies are actively seeking ways to enhance their conversion rates and boost sales. However, with numerous variables at play, determining which changes will have the most significant impact can be a complex task.

One common mistake ecommerce companies make is relying on guesswork or intuition when changing their websites and outreach strategies.

This is where A/B testing comes in. A/B testing lets ecommerce companies make data-driven decisions and optimize their conversion rates. In this article, you'll learn how ecommerce companies can improve their conversion rates by adding A/B testing to their conversion rate optimization (CRO) arsenal.

Choosing the parameters for experimentation and crafting hypotheses

Every aspect of a page, from the copy to the layout and configuration, can be tested, so focus on the areas with the greatest potential to improve conversions. To identify them:

- Gather and analyze feedback from customers, customer service, sales, and support teams

- Conduct customer surveys to obtain specific feedback

- Examine analytics data

- Utilize tools such as heatmaps to track visitor behavior

- Conduct user testing to gain insight into the entire visitor experience

Creating a hypothesis is a crucial step in the A/B testing process, because a tightly constructed hypothesis ties each test to a specific change and a specific expected outcome.

The process involves identifying which aspects of your website, marketing campaign, or user experience you want to improve, then forming educated guesses, or hypotheses, about how changes to those aspects will affect user behavior.

Selecting variables to test

Start by identifying specific elements or variables that you want to test. These could include elements on your website such as recommendation strategies, headlines, product descriptions, images, call-to-action (CTA) buttons, checkout processes, or even pricing strategies. In marketing campaigns, variables might involve ad copy, visuals, targeting parameters, etc.

Prioritize elements that are critical to the user's decision-making process, or that analytics or user feedback show have room for improvement. Rank them in a priority table, for example in a spreadsheet.
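The priority table can be as simple as a score per idea. The sketch below assumes an ICE-style score: Impact, Confidence, and Ease, each rated from 1 to 10 and averaged. This is one common scoring scheme, chosen here for illustration. The `TestIdea` class, the ratings, and the backlog entries are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class TestIdea:
    """One row of the priority table (all fields are illustrative)."""
    element: str
    impact: int      # expected effect on conversions, 1-10
    confidence: int  # strength of supporting evidence (analytics, feedback), 1-10
    ease: int        # how cheap it is to build and run, 1-10

    @property
    def score(self) -> float:
        # ICE-style score (assumed scheme): simple average of the three ratings
        return (self.impact + self.confidence + self.ease) / 3

def rank(ideas: list[TestIdea]) -> list[TestIdea]:
    """Return ideas sorted from highest to lowest priority score."""
    return sorted(ideas, key=lambda idea: idea.score, reverse=True)

# Hypothetical backlog of test ideas
backlog = [
    TestIdea("Checkout process", impact=9, confidence=7, ease=3),
    TestIdea("CTA button copy", impact=5, confidence=6, ease=9),
    TestIdea("Recommendation strategy", impact=8, confidence=8, ease=6),
]

for idea in rank(backlog):
    print(f"{idea.score:.1f}  {idea.element}")
```

In this example the recommendation strategy ranks first. The checkout process has the highest expected impact but is the hardest to build, so it ranks last.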

Formulating hypotheses

Once you’ve selected the variables, you need to create hypotheses, which are informed guesses about how changes to these variables will impact user behavior.

An A/B test is framed by two competing hypotheses: the null hypothesis (H0) and the alternative hypothesis (H1). The null hypothesis (H0) states that changing the selected variable makes no difference to the metric you are measuring. Under H0, any gap between the control group (A) and the experimental group (B) is due to random chance.

The alternative hypothesis (H1) states that the change to the variable produces a real difference between the control and experimental groups. This is the effect you hope to find evidence for. An A/B test cannot prove H1 outright, but it can show that the observed data would be unlikely if H0 were true.
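For a test on conversion rate, the two hypotheses can be written formally. Here p_A and p_B stand for the true conversion rates of the control and experimental groups. The notation is ours, used for illustration:

```latex
\begin{aligned}
H_0 &: p_A = p_B    && \text{(the change has no effect)} \\
H_1 &: p_A \neq p_B && \text{(the change has some effect, in either direction)}
\end{aligned}
```

This is the two-sided form. If you only care whether the change improves the metric, you can use the one-sided alternative H_1: p_B > p_A instead. Either way, choose the form before the test starts.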

Setting up the A/B test

Once you've formulated your hypothesis, you can set up your A/B test. This involves creating two versions of the webpage or marketing material: one where the variable remains unchanged (control group) and one with the proposed change (experimental group).

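You then randomly assign visitors to each version and track their interactions and conversions. Random assignment matters because it ensures the two groups differ only in the version they see, so any difference in results can be attributed to the change. Statistical analysis then tells you whether the data give enough evidence to reject the null hypothesis (H0) in favor of the alternative hypothesis (H1).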
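One common way to randomize visitors is to hash a stable user identifier together with the experiment name. The result acts like a random number, but it is the same every time a given user returns. The sketch below follows that approach. `assign_variant` and the experiment name `"checkout-cta-test"` are hypothetical, and this is not the method of any particular testing platform.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically assign a user to 'control' or 'variant'.

    Hashing user_id together with the experiment name gives every user a
    stable, effectively random bucket. Returning visitors always see the
    same version, and different experiments are bucketed independently.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1)
    bucket = int(digest[:8], 16) / 0x100000000
    return "control" if bucket < split else "variant"

# Split 10,000 hypothetical users and count how many land in each group
counts = {"control": 0, "variant": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "checkout-cta-test")] += 1
print(counts)
```

Hashing produces a split that is close to 50/50 but rarely exact. That is normal; a large, persistent imbalance points to a setup problem instead.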

How to plan your A/B test

Planning your A/B test involves meticulous consideration of the sample size and test duration.

By calculating the appropriate sample size, considering the duration in light of various factors, and monitoring the test closely, you can ensure that your A/B test yields meaningful and actionable insights for optimizing your website, marketing campaigns, or user experiences.

Ideal sample size in A/B testing

The sample size is a critical factor because it directly affects the reliability and statistical significance of your results. An insufficient sample size can lead to inconclusive or unreliable outcomes.

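The ideal sample size depends on several factors:

- your baseline conversion rate
- the desired confidence level (usually 95%, which corresponds to a 5% significance level)
- the desired statistical power (commonly 80%)
- the minimum effect size you want to detect (how large a difference matters to you)

The smaller the effect you want to detect, the larger the sample you need.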

There are online calculators and statistical tools available to help you determine the appropriate sample size for your test.

Statistical power is the probability that your test will correctly detect a real difference between the control and experimental groups when such a difference exists. Low statistical power can result in missed opportunities to detect meaningful changes.
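Online calculators for conversion-rate tests typically rely on the standard normal-approximation formula for comparing two proportions, though individual tools may use variations. A minimal sketch of that formula is shown below. The function name and the 5% to 6% example are illustrative. The defaults of 0.05 for significance and 0.80 for power are common conventions, not fixed rules.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_group(p_control: float, p_variant: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH group for a two-sided two-proportion z-test.

    p_control: baseline conversion rate (e.g. 0.05 for 5%)
    p_variant: smallest variant conversion rate worth detecting
    alpha:     significance level (0.05 corresponds to 95% confidence)
    power:     probability of detecting the effect if it is real
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for a two-sided test
    z_beta = z.inv_cdf(power)
    p_bar = (p_control + p_variant) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p_control * (1 - p_control)
                                 + p_variant * (1 - p_variant))) ** 2
    return ceil(numerator / (p_control - p_variant) ** 2)

# Detecting a lift from 5% to 6% conversion at 95% confidence and 80% power
print(sample_size_per_group(0.05, 0.06))
```

For a 5% baseline and a 6% target, this comes to a little over 8,000 visitors per group. Halving the detectable lift (5% to 5.5%) roughly quadruples the requirement.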

Segmentation in A/B testing

Depending on your goals, you may need to consider segmenting your audience. For example, if you have different user demographics or customer segments, you may want to ensure that each segment has a sufficient sample size for meaningful analysis.
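Checking each segment is a simple comparison against the per-group target from your sample-size calculation, for example from an online calculator. In the sketch below, the segment names, visitor counts, and the target of 8,158 visitors per group are hypothetical.

```python
def underpowered_segments(visitors_per_group: dict[str, int],
                          required_per_group: int) -> list[str]:
    """Return the segments whose per-group sample falls short of the target."""
    return [segment for segment, n in visitors_per_group.items()
            if n < required_per_group]

# Hypothetical per-group visitor counts expected by the end of the test
segments = {"mobile": 12_400, "desktop": 6_900, "tablet": 1_150}
print(underpowered_segments(segments, required_per_group=8_158))
# ['desktop', 'tablet']
```

Any segment flagged here can still be reported, but its results should be treated as directional. The alternative is to run the test longer until that segment reaches the target.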

How long should you run an A/B test?

The duration of your A/B test should be carefully planned to account for various factors such as:

- Seasonality: Consider whether there are any seasonal patterns or external events that might affect your results. You may need to run the test for a full business cycle to capture these variations.

- Sample size accumulation: Ensure that you have enough time to accumulate the required sample size while maintaining the test’s integrity. If your website or campaign has low traffic, it may take longer to gather sufficient data.

- Stability: It’s essential to run the test long enough to capture variations in user behavior. Rushing a test or prematurely ending it can lead to unreliable results.

- Learning curve: In some cases, users may need time to adapt to changes. For instance, if you’re testing a website redesign, users might take a few days to get used to the new layout before their behavior stabilizes.
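The first two factors can be combined into a rough duration estimate. First, find how many days your traffic needs to reach the required sample. Then round up to whole business cycles. The sketch assumes a 7-day cycle so that every weekday and weekend is covered equally. The function name and traffic figures are hypothetical.

```python
from math import ceil

def estimate_duration_days(per_group: int, groups: int, daily_visitors: int,
                           cycle_days: int = 7) -> int:
    """Days needed to reach the sample size, rounded up to whole business cycles.

    per_group:      required visitors per group (from a sample-size calculation)
    groups:         number of versions in the test (2 for a simple A/B test)
    daily_visitors: eligible visitors entering the test per day
    cycle_days:     length of one business cycle (assumed weekly by default)
    """
    days_for_sample = ceil(per_group * groups / daily_visitors)
    # Never stop mid-cycle: round up to a whole number of cycles
    return ceil(days_for_sample / cycle_days) * cycle_days

print(estimate_duration_days(per_group=8_158, groups=2, daily_visitors=1_500))  # 14
```

With 1,500 eligible visitors a day, collecting about 16,300 visitors takes 11 days. The function rounds this up to 14 days, or two full weeks. Seasonality and learning-curve effects may justify running even longer.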

Monitoring A/B tests in progress to eliminate outliers and yield reliable results

Throughout the test, you must continuously monitor the data to ensure that the test is running smoothly and that there are no unexpected issues. If you notice anomalies or irregularities, you may need to make adjustments or even extend the duration of the test to account for these factors.
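A common check while a test is running is for sample ratio mismatch (SRM). SRM means the observed traffic split differs from the intended split by more than chance would explain. It usually signals a bug in assignment or tracking, not a real user effect. The sketch below runs a chi-square goodness-of-fit test with 1 degree of freedom. The 0.001 alarm threshold is a widely used convention that we assume here, and the visitor counts are hypothetical.

```python
from math import erfc, sqrt

def srm_p_value(control_users: int, variant_users: int,
                expected_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit test (1 degree of freedom) for the traffic split."""
    total = control_users + variant_users
    expected_control = total * expected_share
    expected_variant = total - expected_control
    chi2 = ((control_users - expected_control) ** 2 / expected_control
            + (variant_users - expected_variant) ** 2 / expected_variant)
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2))
    return erfc(sqrt(chi2 / 2))

p = srm_p_value(10_000, 10_500)
if p < 0.001:  # conservative alarm threshold (assumed convention)
    print(f"Possible sample ratio mismatch (p = {p:.5f}); check the setup")
```

A flagged mismatch is a reason to pause and debug the setup. Extending a test does not fix a broken split.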

Evaluating and drawing insights from test results

Analyzing and interpreting ecommerce A/B test results combines statistical analysis, a focus on pre-decided key metrics, an understanding of user behavior, and a commitment to continuous improvement.

- Data collection and cleaning: This involves removing outliers, addressing missing data points, and verifying that the data is representative of your target audience.

- Utilize statistical analysis: This includes significance tests, effect sizes, and confidence intervals. Most tests use a 5% significance level (95% confidence), so a result counts as significant when p < 0.05. This means that if there were truly no difference between the versions, a gap at least as large as the one observed would occur less than 5% of the time by random chance.

- Tracking your predefined key ecommerce metrics: Use metrics such as click-through rate, cart abandonment rate, purchase rate, and average order value to assess the A/B test's impact.

- Segmentation: Segment audiences to understand varying responses and analyze user behavior.

- Iterative A/B testing: Learn from your A/B tests and implement your findings into your subsequent testing to reap long-term benefits in your CRO efforts.

- Effective communication: Make informed decisions and communicate results effectively to all stakeholders.
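For a conversion-rate test, the statistical analysis step can be sketched as a two-proportion z-test plus a confidence interval for the lift. The sketch uses the pooled rate for the test statistic and an unpooled (Wald) standard error for the interval, which is one common textbook pairing. The function name and the conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                          alpha: float = 0.05) -> dict:
    """Two-sided z-test and confidence interval for a difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under H0 (no difference), used for the test statistic
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Unpooled standard error for the confidence interval of the difference
    se_diff = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    diff = p_b - p_a
    return {
        "lift_abs": diff,             # absolute difference in conversion rate
        "lift_rel": diff / p_a,       # relative lift over the control
        "p_value": p_value,
        "ci": (diff - z_crit * se_diff, diff + z_crit * se_diff),
        "significant": p_value < alpha,
    }

# Hypothetical results: 8,200 visitors per group, 5.0% vs 6.0% conversion
result = two_proportion_z_test(conv_a=410, n_a=8_200, conv_b=492, n_b=8_200)
print(result)
```

Report the confidence interval alongside the p-value. The interval shows the plausible range of the true lift, which is often more useful for a business decision than a significant/not-significant label alone.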

Zalora, an online fashion and lifestyle retailer, created a seamless search and discovery experience using ViSenze's Discovery Suite, which includes A/B testing for product recommendations. As a result, its engagement rate increased by more than 10% and its average order value by 15%.

Trends in ecommerce A/B testing for the future

Personalization and mobile optimization are essential trends in ecommerce A/B testing.

Giants such as Amazon and Google use A/B testing to tailor content and improve the mobile user experience, ultimately driving engagement and revenue.

Personalization testing

Take Amazon's product recommendations as an example. Amazon makes extensive use of A/B testing for personalization to enhance the customer experience.

How it works:

Users see product recommendations based on their past browsing and purchase history. A/B testing is employed to compare different algorithms and strategies for suggesting products.

For instance, they might test whether showing “Frequently Bought Together” products is more effective than showing “Recommended for You” products for a specific user segment.

Amazon analyzes click-through rates, conversion rates, and revenue generated to determine which recommendation strategy works best.
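The comparison described above can be illustrated with a toy calculation of those metrics for two recommendation strategies. This is not Amazon's system. The class, the metric formulas, and all counts are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class StrategyStats:
    """Aggregated results for one recommendation strategy (hypothetical numbers)."""
    name: str
    impressions: int   # times the recommendation widget was shown
    clicks: int        # clicks on recommended products
    visitors: int      # visitors exposed to the strategy
    orders: int        # orders placed by those visitors
    revenue: float     # revenue from those orders

    def summary(self) -> dict:
        return {
            "ctr": self.clicks / self.impressions,
            "conversion_rate": self.orders / self.visitors,
            "revenue_per_visitor": self.revenue / self.visitors,
            "aov": self.revenue / self.orders if self.orders else 0.0,
        }

strategies = [
    StrategyStats("Frequently Bought Together", 50_000, 2_400, 20_000, 900, 58_500.0),
    StrategyStats("Recommended for You", 50_000, 2_900, 20_000, 860, 54_180.0),
]
for s in strategies:
    print(s.name, {k: round(v, 4) for k, v in s.summary().items()})
```

In this invented data, “Recommended for You” wins on click-through rate but loses on conversion rate and revenue per visitor. Metrics can disagree like this, which is why the key decision metric should be chosen before the test starts.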

Mobile optimization

Google’s shift to mobile-first indexing is a great example of the importance of mobile optimization. While not an ecommerce site itself, Google’s search results play a critical role in driving traffic to ecommerce websites.

How it works:

Google prioritizes the mobile version of websites over desktop versions in its search rankings. Websites that are not optimized for mobile can see a drop in search rankings.

Ecommerce companies must optimize their websites for mobile to maintain or improve their visibility on Google.

A/B testing comes into play when determining the most effective mobile optimization strategies.

For example, an ecommerce site might A/B test two different mobile site designs: one with a simplified checkout process and another with a prominent search bar.

Boost conversion rates and improve your customer journey by iteratively A/B testing your recommendations using ViSenze

With ViSenze's A/B testing, you can see which recommendation strategy performs better!

Our native A/B testing tool lets you test different ViSenze recommendations against each other.

You can create two versions of a recommendation carousel, with a central hypothesis behind the difference between them. The two versions are shown to two similarly sized audiences over a set period, for example at least two weeks. The results are then analyzed, usually producing a winner that becomes the default.

Here are the metrics that are compared in an A/B test:

- Session Engagement Rate

- Clickthrough Rate

- Variant Add-to-cart Rate

- Variant Conversion Rate

- Average Order Value

No more going with your gut. You can run as many experiments as you like and evaluate each one with the right data to make the best decisions. With ViSenze's recommendations and A/B testing, you can drive higher conversions, AOV, and revenue!

In conclusion

A/B testing is an iterative journey, where ongoing optimization and refinement become the norm, helping ecommerce businesses adapt and flourish in an ever-evolving digital landscape.

By methodically testing one variable at a time, whether recommendation strategies, product images, CTAs, or checkout processes, companies can pinpoint the precise impact of each change and refine their strategies accordingly. An adequate sample size and test duration also give a test enough statistical power to detect real effects, which guards against hasty conclusions.

With these principles in mind, ecommerce companies can harness the power of A/B testing to enhance user experiences, boost conversions, and ultimately thrive in the competitive online marketplace.

Try ViSenze Discovery Suite for free to improve your user journey and boost conversions!